Project: How to automatically copy data from AWS S3 – Lambda events

Hocalwire Labs Private Limited

1. Please establish two folders within the S3 Bucket, which should be named as follows:

- "original"

- "compressed"

2. It is requested that you develop a Lambda function to execute the subsequent actions:

a) Upon the upload of an image into the "original" folder, the Lambda function will be automatically activated.

b) The Lambda function will proceed to compress the uploaded image.

c) The compressed version of the image shall be duplicated and stored within the "compressed" folder.

Submission Requirements:

1. You are required to compile a document that provides guidance on the setup and configuration of the Lambda function.

2. The Lambda function's source code should be shared as part of the submission.

From this article you will learn how to create:

an S3 bucket with appropriate permissions,

an IAM policy for the IAM role,

an IAM role with the appropriate policies attached,

a Lambda function with the appropriate IAM role assigned,

a mechanism that allows the Lambda function to run automatically.

How To Use S3 Trigger in AWS Lambda

1) S3 bucket

I will create two folders in the S3 bucket. The first is the source, from which the Lambda function copies files. The second is the destination, to which the files are copied.

Just click ‘Create bucket’ and give it a name. Make sure you select the correct region. Unless you specifically need public access, leave ‘Block all public access’ enabled.

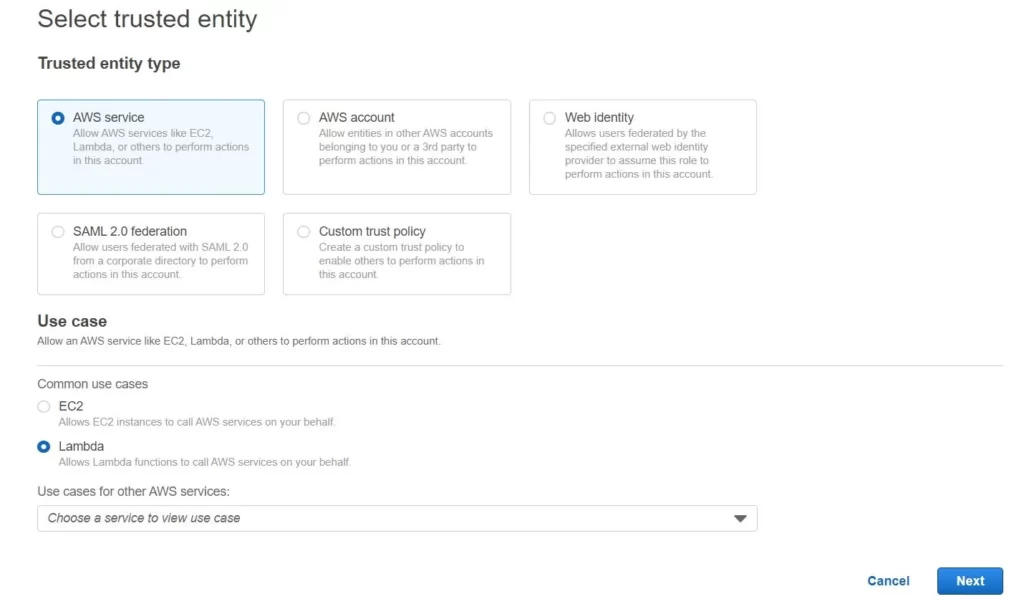
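The two folders described above can also be created from a script instead of the console. The following is a minimal sketch, assuming a boto3 S3 client is passed in and that each folder is represented by a zero-byte object whose key ends in `/` (which is how the S3 console represents folders). The helper name `create_folders` is hypothetical, and the bucket name in the usage comment is the one used in the Lambda code later in this article.

```python
def create_folders(s3_client, bucket_name, folders=("original", "compressed")):
    """Create zero-byte 'folder' marker objects and return their keys.

    s3_client is assumed to be a boto3 S3 client, e.g. boto3.client("s3").
    """
    created = []
    for folder in folders:
        # A key ending in "/" is shown as a folder in the S3 console.
        key = folder.rstrip("/") + "/"
        s3_client.put_object(Bucket=bucket_name, Key=key)
        created.append(key)
    return created


# Usage (requires boto3 and valid AWS credentials):
#   import boto3
#   create_folders(boto3.client("s3"), "hocalwirelabsprivatelimitedproject")
```

Passing the client in as an argument keeps the helper easy to try out with a stand-in object before pointing it at a real bucket.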

2) IAM role for Lambda

First, create a new IAM role for the Lambda function. Appropriate policies should be attached to this role.

Here I am granting full access for now; for anything beyond a test setup, the permissions should be narrowed.
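As an alternative to full access, the role's policy can be limited to what the copy actually needs. The sketch below builds such a policy document as a Python dictionary. It assumes the folder names from this article (`original/` and `compressed/`); the function name `build_lambda_s3_policy` and the statement IDs are hypothetical, and a real setup may need further permissions (for example for object tags or encryption keys).

```python
import json


def build_lambda_s3_policy(bucket_name, source_prefix="original/", dest_prefix="compressed/"):
    """Return an IAM policy document scoped to the source and destination folders."""
    bucket_arn = f"arn:aws:s3:::{bucket_name}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Listing is a bucket-level action, restricted here by key prefix.
                "Sid": "ListSourceFolder",
                "Effect": "Allow",
                "Action": "s3:ListBucket",
                "Resource": bucket_arn,
                "Condition": {"StringLike": {"s3:prefix": [f"{source_prefix}*"]}},
            },
            {
                "Sid": "ReadOriginals",
                "Effect": "Allow",
                "Action": "s3:GetObject",
                "Resource": f"{bucket_arn}/{source_prefix}*",
            },
            {
                "Sid": "WriteCompressed",
                "Effect": "Allow",
                "Action": "s3:PutObject",
                "Resource": f"{bucket_arn}/{dest_prefix}*",
            },
        ],
    }


policy = build_lambda_s3_policy("hocalwirelabsprivatelimitedproject")
print(json.dumps(policy, indent=2))
```

The printed JSON can be pasted into the policy editor in the IAM console. The function will also need permission to write its logs, which the AWS managed policy `AWSLambdaBasicExecutionRole` provides.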

3) Lambda function

How to create a lambda function

Okay, now we come to the most important part: creating the Lambda function. I’m authoring it from scratch, with Python 3.9 as the runtime.

Remember to use the IAM role created in point number 2:

Lambda code to transfer our files from one location to another:

import boto3

s3 = boto3.resource('s3')

def lambda_handler(event, context):
    # Source and destination are folders in the same bucket.
    source_bucket_name = 'hocalwirelabsprivatelimitedproject'
    source_folder_prefix = 'original/images/'
    dest_bucket_name = 'hocalwirelabsprivatelimitedproject'
    dest_folder_prefix = 'compressed/images/'

    source_bucket = s3.Bucket(source_bucket_name)

    # List only the objects directly under the source prefix.
    for obj in source_bucket.objects.filter(Prefix=source_folder_prefix, Delimiter='/'):
        source_key = obj.key
        # Swap the folder prefix to build the destination key.
        dest_key = source_key.replace(source_folder_prefix, dest_folder_prefix, 1)
        print('Copy file from ' + source_key + ' to ' + dest_key)
        s3.Object(dest_bucket_name, dest_key).copy_from(
            CopySource={'Bucket': source_bucket_name, 'Key': source_key})

    return {
        'statusCode': 200,
        'body': 'Image transfer from original to compressed folder successful!'
    }

This is the trigger we need to attach for automation

You can modify the Lambda function as you wish. I wanted to show that not all files in S3 have to be copied; the copy can be limited to a specific folder.

Make sure the function is working properly by clicking Deploy and then Test. If you have done everything right, the files should be copied from the source folder to the target folder. Note that this code copies the files and does not remove them from the source folder.
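One such modification is the compression step that the project brief asks for, since the function above copies images unchanged. The following is only a sketch of how that step might look, assuming the third-party Pillow library is made available to the function (it is not part of the default Lambda Python runtime, so it would have to be added, for example as a layer). The function name `compress_image` and the default quality of 70 are illustrative choices, not part of the original code.

```python
import io


def compress_image(image_bytes, quality=70):
    """Re-encode an image as JPEG at the given quality and return the new bytes."""
    # Imported here so the module still loads where Pillow is not installed.
    from PIL import Image

    with Image.open(io.BytesIO(image_bytes)) as img:
        # JPEG has no alpha channel, so convert RGBA or palette images first.
        rgb = img.convert("RGB")
        buffer = io.BytesIO()
        rgb.save(buffer, format="JPEG", quality=quality, optimize=True)
    return buffer.getvalue()
```

Inside the handler, the `copy_from` call could then be replaced by reading the source object with `s3.Object(bucket, key).get()['Body'].read()`, passing the bytes through `compress_image`, and writing the result with `s3.Object(bucket, dest_key).put(Body=...)`. Because the output is always JPEG, the destination key may need a `.jpg` extension.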

How to add automation to S3

Once you have made sure that everything is working correctly, you can add the automation. In ‘Function overview’, click ‘Add trigger’:

Select S3 from the list, then choose your source bucket, which is where you will be adding files.

Set the event type to PUT, because you only want the function to be triggered when objects are added.

It is very important to add a prefix if you only want to copy files from a specific folder. Otherwise, the function will be called every time anything is added to the bucket, even in a different folder.
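When the trigger fires, Lambda passes the handler an event that describes the object that was added. The function shown earlier ignores this event and copies the whole folder each time. The sketch below shows how the bucket and key could be read from the event instead, so that only the new file is processed. The helper names `uploaded_objects` and `destination_key` are hypothetical; the prefixes are the ones used in the Lambda code in this article.

```python
from urllib.parse import unquote_plus

SOURCE_PREFIX = "original/images/"
DEST_PREFIX = "compressed/images/"


def uploaded_objects(event):
    """Return (bucket, key) pairs for the objects described in an S3 event."""
    pairs = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Keys arrive URL-encoded (a space becomes '+'), so decode them.
        key = unquote_plus(record["s3"]["object"]["key"])
        pairs.append((bucket, key))
    return pairs


def destination_key(source_key):
    """Map a key under the source prefix to the matching destination key.

    Returns None for keys outside the source prefix.
    """
    if not source_key.startswith(SOURCE_PREFIX):
        return None
    return DEST_PREFIX + source_key[len(SOURCE_PREFIX):]
```

In the handler, each pair returned by `uploaded_objects(event)` could be passed to the existing `copy_from` call, skipping any key for which `destination_key` returns `None`.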
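The same trigger settings (PUT event type plus a key prefix) can be written as an S3 notification configuration. The sketch below only builds the configuration dictionary. The function name `build_notification_config` is hypothetical, and the ARN in the usage comment is a placeholder rather than a real value.

```python
def build_notification_config(function_arn, prefix="original/"):
    """Return an S3 notification configuration for PUT events under a prefix."""
    return {
        "LambdaFunctionConfigurations": [
            {
                "LambdaFunctionArn": function_arn,
                # Fire only when an object is created with a PUT request.
                "Events": ["s3:ObjectCreated:Put"],
                # Restrict the trigger to keys that start with the prefix.
                "Filter": {
                    "Key": {"FilterRules": [{"Name": "prefix", "Value": prefix}]}
                },
            }
        ]
    }


# Usage (requires boto3 and valid AWS credentials; the ARN is a placeholder):
#   import boto3
#   config = build_notification_config(
#       "arn:aws:lambda:REGION:ACCOUNT_ID:function:FUNCTION_NAME")
#   boto3.client("s3").put_bucket_notification_configuration(
#       Bucket="hocalwirelabsprivatelimitedproject",
#       NotificationConfiguration=config)
```

When the trigger is added through the console as described above, the permission that lets S3 invoke the function is set up as part of that step; when applying the configuration from a script, that permission has to be granted separately.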

Result
